An Old New Upgrade to My K8s

Intro

I have had a little Kubernetes cluster up and running for some time on a collection of Odroid MC1s and an N2. However, recently (not so recently now, but for the sake of the story) I attempted to update it and it all went wrong. I got Rancher set up on it, but nothing, and I mean NOTHING, is compiled for armv7. So I gave up. I’ve since put Linux Mint on the N2 and use it as a home theater PC, which works quite well.

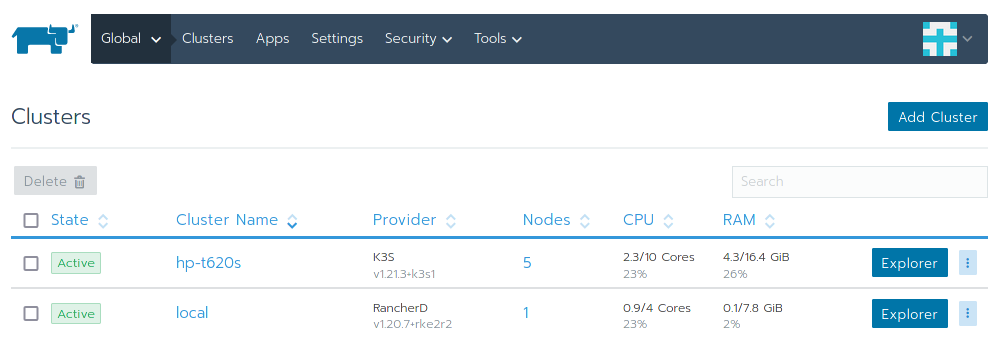

After some time, and lots of forgetting, I came across an auction (for the locals, Mason Grey Strange) that runs once a month selling old and new IT equipment. In the auction I found a set of HP t620s, which were being sold in lots of 5 for a pittance. Seeing them reminded me of my original cluster, so I bought them to replace it.

The Setup

Unfortunately, I didn’t record the process of setting up Rancher. However, since everything was on standard x86_64 hardware, any of the myriad guides for setting up Rancher with a k3s cluster will do. That is what I followed, with Rancher running in a VM on my server and k3s running on the t620s.

Its Use

For a period of time I didn’t set up anything on it. I did integrate it with my GitLab server, but integration was as far as I took it; I didn’t bother setting up anything CI/CD related.

However, I quickly realised that using it to replace my existing CI/CD solution, for the couple of projects that used it, would be a fun little undertaking and would probably simplify things for me.

As an aside, I’m yet to put much thought into security for my server or the applications I run on it. I follow basic best practices, but my old CI/CD solution broke most of them, and more, so moving to the k3s cluster was definitely an upgrade there.

As such, that is what I did.

Not only is the API for this website running on it, but all my GitLab runners run on it now too. The setup was very simple, with most of it being standard k3s/k8s and GitLab integration configuration. All in all, it greatly simplified my GitLab setup and I’m very happy with it.
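For anyone wanting to reproduce the runner side, here is a minimal sketch of Helm values for the `gitlab/gitlab-runner` chart. This is not the configuration used on this cluster, which wasn't recorded. The URL and token are placeholders, and the key names follow the chart as I understand it; they have changed between chart versions, so check the chart's own `values.yaml` before relying on them.

```yaml
# values.yaml for the gitlab/gitlab-runner chart (hypothetical values throughout)
gitlabUrl: https://gitlab.example.com/           # placeholder: your GitLab instance
runnerRegistrationToken: "<REGISTRATION_TOKEN>"  # placeholder: keep out of version control
rbac:
  create: true      # let the chart create the service account and role it needs
runners:
  tags: "docker"    # assumed to match the `docker` tag the pipeline jobs ask for
```

The only part that ties back to the pipeline further down is the `docker` tag, which is what the `build` and `push` jobs select their runner by.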

When I started writing this I had a much grander vision for the end result, but it really isn’t that grand. The final solution for the CI/CD can be found here. Although I don’t use the project any more, it contains all of the CI/CD config required, some of which is outlined below. Using Helm simplified things greatly.

Extras

I don’t have a comprehensive list of the useful things I did while implementing all of this. I did it some time ago and didn’t note much of it down. However, I will try to list some of the bits and pieces I have.

Deployment.yaml

This is used by Helm as part of the CI/CD pipeline for deploying the app (I believe; it’s been a while and I could be wrong).

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: simple-blog-api
      labels:
        app: python
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: python
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 33%
      template:
        metadata:
          labels:
            app: python
        spec:
          containers:
            - name: python
              image: inkletblot/simple-blog-api:<VERSION>
              ports:
                - containerPort: 5000
              livenessProbe:
                httpGet:
                  path: /posts
                  port: 5000
                initialDelaySeconds: 2
                periodSeconds: 2
              readinessProbe:
                httpGet:
                  path: /posts
                  port: 5000
                initialDelaySeconds: 2
                periodSeconds: 2
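The deploy job passes `--set image.tag=${CI_COMMIT_SHA}` to Helm, so for that to have any effect the chart has to read the tag from its values rather than from a hard-coded `<VERSION>`. A minimal sketch of how that wiring might look, assuming a conventionally laid out chart. The `image.repository` key and the default tag are my assumptions; only `image.tag` appears in the pipeline.

```yaml
# values.yaml (hypothetical defaults; the pipeline overrides image.tag with --set)
image:
  repository: inkletblot/simple-blog-api
  tag: latest
---
# templates/deployment.yaml (excerpt; only the image line differs from the manifest above)
spec:
  template:
    spec:
      containers:
        - name: python
          # Helm substitutes the values at render time, e.g. repository:<commit SHA>
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
```

Quoting the templated string keeps the file parseable as YAML before rendering, and it stops an all-digit tag from being read as a number.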

gitlab-ci.yml

This is the GitLab CI configuration I’m using. It integrates with Docker (as I couldn’t get a local registry working with my current setup). I’ll be entirely honest here: I don’t know exactly how the Helm integration works. I believe it works magically in the background using the variables supplied to the pipeline through GitLab.

    image: docker:20.10.5

    stages:
      - build
      - push
      - deploy

    .docker_before_script:
      before_script:
        - echo -n $CI_REGISTRY_TOKEN | docker login -u "$CI_REGISTRY_USER" --password-stdin $CI_REGISTRY

    build:
      extends: .docker_before_script
      stage: build
      tags:
        - docker
      script:
        - docker pull $CI_REGISTRY_IMAGE:latest || true
        - docker build --cache-from $CI_REGISTRY_IMAGE:latest --tag $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA .
        - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA

    # Tag the "master" branch as "latest"
    push latest:
      extends: .docker_before_script
      stage: push
      tags:
        - docker
      script:
        - docker pull $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
        - docker tag $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA $CI_REGISTRY_IMAGE:latest
        - docker push $CI_REGISTRY_IMAGE:latest
      only:
        - master

    # Docker tag any Git tag
    push tag:
      extends: .docker_before_script
      stage: push
      tags:
        - docker
      only:
        - tags
      script:
        - docker pull $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
        - docker tag $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_SLUG
        - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_SLUG

    # Docker Deploy
    # Not currently in use, here for posterity.
    # deploy:
    #   extends: .docker_before_script
    #   stage: deploy
    #   tags:
    #     - docker
    #   environment:
    #     name: Production
    #     url: "$LIVE_SERVER_FQDN"
    #   before_script:
    #     - 'command -v ssh-agent >/dev/null || ( apt-get update -y && apt-get install openssh-client -y)'
    #     - eval $(ssh-agent -s)
    #     - echo "$SSH_PRIVATE_KEY" | tr -d '\r' | ssh-add -
    #     - mkdir -p ~/.ssh
    #     - chmod 700 ~/.ssh
    #     - '[[ -f /.dockerenv ]] && echo -e "Host*\n\tStrictHostKeyChecking no\n\n" >> ~/.ssh/config'
    #   script:
    #     - echo 'sed 's/"$CI_REGISTRY_IMAGE".*/ "$CI_REGISTRY_IMAGE":"$CI_COMMIT_SHA"''
    #     - ssh -J "$PROD_SERVER_USER"@"$LIVE_SERVER_FQDN"
    #       "$PROD_SERVER_USER"@"$PROD_SERVER_LOCAL_HOST_NAME" "cd simple-blog-api && sed
    #       -i 's/simple-blog-api.*/ simple-blog-api:"$CI_COMMIT_SHA"\x27/'
    #       docker-compose.yml && docker-compose up -d --remove-orphans --force-recreate"

    # Helm Deploy
    # Currently in use, works with *Magic*
    deploy:
      stage: deploy
      image:
        name: alpine/helm:latest
        entrypoint: [""]
      script:
        - helm upgrade
          --install
          --wait
          --set image.tag=${CI_COMMIT_SHA}
          simple-blog-api-${CI_COMMIT_REF_SLUG}
          devops/simple-blog-api
      environment:
        name: production
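The *Magic* is probably less mysterious than it looks. Assuming the cluster was attached through GitLab's certificate-based Kubernetes integration (an assumption; I never wrote down how it was connected), GitLab injects deployment variables such as `KUBECONFIG` and `KUBE_NAMESPACE` into any job that declares an `environment`, and `helm` reads `KUBECONFIG` on its own. A hypothetical debugging job, not part of the pipeline above, could make that visible:

```yaml
# Hypothetical job for inspecting what the cluster integration provides.
# The job name is made up; the variables are only set when GitLab manages
# the cluster connection for this environment.
inspect cluster access:
  stage: deploy
  image:
    name: alpine/helm:latest
    entrypoint: [""]
  script:
    # Print only non-secret values: the namespace and the path of the kubeconfig file.
    - echo "namespace=${KUBE_NAMESPACE}"
    - echo "kubeconfig=${KUBECONFIG}"
    # List the releases Helm can see in that namespace.
    - helm list --namespace "${KUBE_NAMESPACE}"
  environment:
    name: production
  when: manual
```

The `environment` block is the important part. If it is removed, the variables come back empty, which would suggest the deploy job relies on it in the same way.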

That is about all I can come up with at the moment. I hope it is useful for someone.

-ink